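This article is based on: Tencent Cloud, Cloud Computing Technology column, "Kubernetes series tutorial (20): Prometheus provides a complete monitoring system"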

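Important: get the time settings right first. If the timezone of the servers or virtual machines differs from the browser's timezone, the monitoring will end up showing no data.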
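Before syncing the clock, it can help to confirm that each node's UTC offset matches the browser's. The following is a minimal shell sketch. It assumes the browser runs in China Standard Time (UTC+8) and that `date` supports `%z`, as GNU coreutils does. `tz_matches` is a hypothetical helper name and is not part of kube-prometheus.

```shell
# Hypothetical helper: succeeds when this machine's current UTC offset
# (as printed by `date +%z`, e.g. +0800) equals the expected offset.
# Assumption: the browser viewing Grafana uses UTC+8, so callers pass +0800.
tz_matches() {
  expected="$1"
  actual="$(date +%z)"
  if [ "$actual" = "$expected" ]; then
    echo "OK: UTC offset is $actual"
    return 0
  fi
  echo "MISMATCH: UTC offset is $actual, expected $expected" >&2
  return 1
}
```

On a node where the check fails, running `tz_matches +0800 || timedatectl set-timezone Asia/Shanghai` as root would switch the node to the assumed timezone.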

Sync the clock against an NTP server:

$ ntpdate ntp.ntsc.ac.cn

Preface

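When I first learned Kubernetes, I found out that Prometheus was the best tool for container monitoring. Later, when I got to DaemonSets, I read that monitoring and logging agents can be deployed as DaemonSet resources. So I, the author, tried deploying cAdvisor and node-exporter that way. I even boasted that I would write my own Prometheus DaemonSet someday. I was too naive.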

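Actually using Prometheus to monitor a Kubernetes cluster turned out to be far harder than I imagined. It involves many components, the dashboards need tuning, and you may even have to learn PromQL. Learning all of this does let you build exactly the monitoring you want, but it cannot be done overnight.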

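So I kept looking for articles like the one referenced above. I was hoping for a project that bundles every component Prometheus needs, with the dashboards already tuned, so it can be deployed and used right away.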

My environment differs slightly from the one in the reference:

| Hostname | IP | Docker Version | Kubernetes Version |
|---|---|---|---|
| master1 | 192.168.1.11 | 19.03.8 | 1.18.1 |
| master2 | 192.168.1.12 | 19.03.8 | 1.18.1 |
| master3 | 192.168.1.13 | 19.03.8 | 1.18.1 |
| node | 192.168.1.14 | 19.03.8 | 1.18.1 |

Installing Prometheus

Get the source code on one of the masters:

$ git clone https://github.com/prometheus-operator/kube-prometheus.git

It is recommended to pull the images in advance, because quite a few of them are used.
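Pre-pulling can be scripted. The following is a minimal sketch with these assumptions:

- Docker is the runtime on every node (19.03.8 per the table above).
- The image names arrive one per line on stdin, for example from the grep pipeline that follows.

For kube-state-metrics, the script applies the bitnami workaround described in the image list. `prepull_images` and the `DOCKER` override are hypothetical names. Setting `DOCKER=echo` turns the script into a dry run that only prints the commands. The grep only matches `image:` fields, so images the operator receives through command-line flags (such as its config-reloader sidecar) may be missing from the list.

```shell
# Hypothetical helper: pull every image named on stdin (one per line).
# k8s.gcr.io cannot be reached from the author's network, so kube-state-metrics
# is pulled from the bitnami mirror and retagged with the name the manifests use.
# Set DOCKER=echo to print the commands instead of running them (dry run).
prepull_images() {
  docker_cmd="${DOCKER:-docker}"
  while IFS= read -r image; do
    case "$image" in
      ''|'#'*) continue ;;  # skip blank lines and comment lines
      k8s.gcr.io/kube-state-metrics/kube-state-metrics:v1.9.8)
        mirror="bitnami/kube-state-metrics:1.9.8"
        $docker_cmd pull "$mirror" </dev/null &&
          $docker_cmd tag "$mirror" "$image" </dev/null
        ;;
      *)
        $docker_cmd pull "$image" </dev/null
        ;;
    esac
  done
}
```

The repository is cloned on only one master. One option is to save the list to a file (for example a hypothetical `images.txt`), copy it to each node, and run `prepull_images < images.txt` there.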

$ grep -rn "image:" kube-prometheus/manifests/*.yaml kube-prometheus/manifests/setup/*.yaml | awk '{print $3}' | grep -v '^$' | sort | uniq

# The following images are needed

directxman12/k8s-prometheus-adapter:v0.8.3

grafana/grafana:7.4.3

jimmidyson/configmap-reload:v0.5.0

# Pay special attention to this image: its prefix is k8s.gcr.io, which cannot be pulled here, so pull bitnami/kube-state-metrics:1.9.8 instead

# and then rename it to the name below with docker tag

k8s.gcr.io/kube-state-metrics/kube-state-metrics:v1.9.8

quay.io/brancz/kube-rbac-proxy:v0.8.0

quay.io/prometheus/alertmanager:v0.21.0

quay.io/prometheus/blackbox-exporter:v0.18.0

quay.io/prometheus/node-exporter:v1.1.1

quay.io/prometheus-operator/prometheus-operator:v0.46.0

quay.io/prometheus/prometheus:v2.25.0

Quick install

$ kubectl apply -f kube-prometheus/manifests/setup/

Check the custom resources (CRDs)
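The CRDs created from setup/ may need a few seconds before the API server serves them. Applying kube-prometheus/manifests/ too early can fail because kinds such as ServiceMonitor are not recognized yet. The kube-prometheus README has suggested a loop around `kubectl get servicemonitors --all-namespaces` for this. Below is a minimal, generic retry helper in the same spirit; `wait_until` and `WAIT_INTERVAL` are hypothetical names.

```shell
# Hypothetical helper: re-run a command until it succeeds or the attempts run out.
# Usage: wait_until MAX_TRIES command [args...]
# WAIT_INTERVAL (seconds between attempts) defaults to 2.
wait_until() {
  max_tries="$1"
  shift
  try=1
  while ! "$@"; do
    if [ "$try" -ge "$max_tries" ]; then
      echo "gave up after $try attempts: $*" >&2
      return 1
    fi
    try=$((try + 1))
    sleep "${WAIT_INTERVAL:-2}"
  done
}
```

For example, `wait_until 30 kubectl get servicemonitors --all-namespaces && kubectl apply -f kube-prometheus/manifests/` waits up to roughly a minute (30 attempts, 2 s apart) before applying the components.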

$ kubectl get crd | grep monitoring

alertmanagerconfigs.monitoring.coreos.com 2021-02-21T11:00:17Z

alertmanagers.monitoring.coreos.com 2021-02-21T11:00:17Z

podmonitors.monitoring.coreos.com 2021-02-21T11:00:18Z

probes.monitoring.coreos.com 2021-02-21T11:00:18Z

prometheuses.monitoring.coreos.com 2021-02-21T11:00:20Z

prometheusrules.monitoring.coreos.com 2021-02-21T11:00:21Z

servicemonitors.monitoring.coreos.com 2021-02-21T11:00:22Z

thanosrulers.monitoring.coreos.com 2021-02-21T11:00:24Z

Check the deployment, pod and svc

On a slow network you may have to wait a while, because several images need to be downloaded.

$ kubectl get all -n monitoring

NAME READY STATUS RESTARTS AGE

pod/prometheus-operator-cb98796f8-cncjf 2/2 Running 0 33m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/prometheus-operator ClusterIP None <none> 8443/TCP 33m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/prometheus-operator 1/1 1 1 33m

NAME DESIRED CURRENT READY AGE

replicaset.apps/prometheus-operator-cb98796f8 1 1 1 33m

Installing the components

This step creates a huge number of resources and downloads a lot of images. That is expected, given how much this project bundles.

$ kubectl apply -f kube-prometheus/manifests/

alertmanager.monitoring.coreos.com/main created

prometheusrule.monitoring.coreos.com/main-rules created

secret/alertmanager-main created

service/alertmanager-main created

serviceaccount/alertmanager-main created

servicemonitor.monitoring.coreos.com/alertmanager created

clusterrole.rbac.authorization.k8s.io/blackbox-exporter created

clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created

configmap/blackbox-exporter-configuration created

deployment.apps/blackbox-exporter created

service/blackbox-exporter created

serviceaccount/blackbox-exporter created

servicemonitor.monitoring.coreos.com/blackbox-exporter created

secret/grafana-datasources created

configmap/grafana-dashboard-apiserver created

configmap/grafana-dashboard-cluster-total created

configmap/grafana-dashboard-controller-manager created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-node created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-k8s-resources-workload created

configmap/grafana-dashboard-k8s-resources-workloads-namespace created

configmap/grafana-dashboard-kubelet created

configmap/grafana-dashboard-namespace-by-pod created

configmap/grafana-dashboard-namespace-by-workload created

configmap/grafana-dashboard-node-cluster-rsrc-use created

configmap/grafana-dashboard-node-rsrc-use created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-persistentvolumesusage created

configmap/grafana-dashboard-pod-total created

configmap/grafana-dashboard-prometheus-remote-write created

configmap/grafana-dashboard-prometheus created

configmap/grafana-dashboard-proxy created

configmap/grafana-dashboard-scheduler created

configmap/grafana-dashboard-statefulset created

configmap/grafana-dashboard-workload-total created

configmap/grafana-dashboards created

deployment.apps/grafana created

service/grafana created

serviceaccount/grafana created

servicemonitor.monitoring.coreos.com/grafana created

prometheusrule.monitoring.coreos.com/kube-prometheus-rules created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

deployment.apps/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created

service/kube-state-metrics created

serviceaccount/kube-state-metrics created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kubernetes-rules created

clusterrole.rbac.authorization.k8s.io/node-exporter created

clusterrolebinding.rbac.authorization.k8s.io/node-exporter created

daemonset.apps/node-exporter created

prometheusrule.monitoring.coreos.com/node-exporter-rules created

service/node-exporter created

serviceaccount/node-exporter created

servicemonitor.monitoring.coreos.com/node-exporter created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created

configmap/adapter-config created

deployment.apps/prometheus-adapter created

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created

service/prometheus-adapter created

serviceaccount/prometheus-adapter created

servicemonitor.monitoring.coreos.com/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/prometheus-k8s created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created

prometheusrule.monitoring.coreos.com/prometheus-operator-rules created

servicemonitor.monitoring.coreos.com/prometheus-operator created

prometheus.monitoring.coreos.com/k8s created

prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s-config created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

service/prometheus-k8s created

serviceaccount/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus created

servicemonitor.monitoring.coreos.com/kube-apiserver created

servicemonitor.monitoring.coreos.com/coredns created

servicemonitor.monitoring.coreos.com/kube-controller-manager created

servicemonitor.monitoring.coreos.com/kube-scheduler created

servicemonitor.monitoring.coreos.com/kubelet created

Check node-exporter
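In the output below, one node-exporter pod stays Pending at 0/2. A small filter makes such pods easy to spot. This is a minimal sketch that assumes the default `kubectl get pods` column layout (NAME, READY, STATUS, RESTARTS, AGE); `not_ready` is a hypothetical name. For any pod it reports, `kubectl describe pod -n monitoring <name>` lists the scheduler's reason under Events.

```shell
# Hypothetical filter: read `kubectl get pods` output on stdin and print every pod
# that is not Running or whose READY column is incomplete (e.g. 0/2).
# Assumes the default column order NAME READY STATUS RESTARTS AGE.
not_ready() {
  awk '
    $1 == "NAME" { next }                # skip the header line, if present
    NF >= 3 {
      split($2, ready, "/")              # "1/2" -> ready[1]=1, ready[2]=2
      if ($3 != "Running" || ready[1] != ready[2])
        print $1, $2, $3
    }
  '
}
```

Usage: `kubectl get pods -n monitoring | not_ready`.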

$ kubectl get pods -n monitoring | grep node-exporter

node-exporter-2q5gt 2/2 Running 0 24m

node-exporter-54gzj 2/2 Running 0 24m

node-exporter-5b85d 0/2 Pending 0 24m

node-exporter-nx2kd 2/2 Running 0 24m

Check the core and alerting components

$ kubectl get statefulsets.apps -n monitoring

NAME READY AGE

alertmanager-main 3/3 118m

prometheus-k8s 2/2 117m

Check the services

$ kubectl get svc -n monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-main ClusterIP 10.98.250.100 <none> 9093/TCP 119m

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 119m

blackbox-exporter ClusterIP 10.105.23.253 <none> 9115/TCP,19115/TCP 119m

grafana NodePort 10.105.204.35 <none> 3000:32696/TCP 119m

kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 118m

node-exporter ClusterIP None <none> 9100/TCP 118m

prometheus-adapter ClusterIP 10.97.80.255 <none> 443/TCP 118m

prometheus-k8s ClusterIP 10.105.217.79 <none> 9090/TCP 118m

prometheus-operated ClusterIP None <none> 9090/TCP 118m

prometheus-operator ClusterIP None <none> 8443/TCP 158m

Using Prometheus

As the service list above shows, only grafana is exposed as a NodePort. To reach Prometheus, its service type has to be changed as well.

Here this is done with a patch:

$ kubectl patch -p '{"spec":{"type": "NodePort"}}' svc -n monitoring prometheus-k8s

$ kubectl get svc -n monitoring | grep prometheus-k8s

prometheus-k8s NodePort 10.105.217.79 <none> 9090:30198/TCP 123m

Now open http://NODE_IP:30198, where NODE_IP is the IP of any node in the cluster, for example:

http://192.168.1.11:30198

http://192.168.1.12:30198

http://192.168.1.13:30198

http://192.168.1.14:30198

All four addresses work; I won't include a screenshot here.
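The addresses can also be generated instead of typed by hand. In this minimal sketch, `node_urls` (a hypothetical name) prints one URL per node IP for a given port. The usage line below assumes the NodePort is the first entry in the Service's `ports` list.

```shell
# Hypothetical helper: print http://<ip>:<port> for each node IP given.
# Usage: node_urls PORT IP [IP...]
node_urls() {
  port="$1"
  shift
  for ip in "$@"; do
    printf 'http://%s:%s\n' "$ip" "$port"
  done
}
```

For the Prometheus service above, `node_urls "$(kubectl get svc -n monitoring prometheus-k8s -o jsonpath='{.spec.ports[0].nodePort}')" 192.168.1.11 192.168.1.12 192.168.1.13 192.168.1.14` should print the same four addresses.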

Using Grafana

$ kubectl get svc -n monitoring | grep grafana

grafana NodePort 10.105.204.35 <none> 3000:32696/TCP 128m

The grafana service is already a NodePort, so it can be accessed directly:

http://192.168.1.11:32696

http://192.168.1.12:32696

http://192.168.1.13:32696

http://192.168.1.14:32696

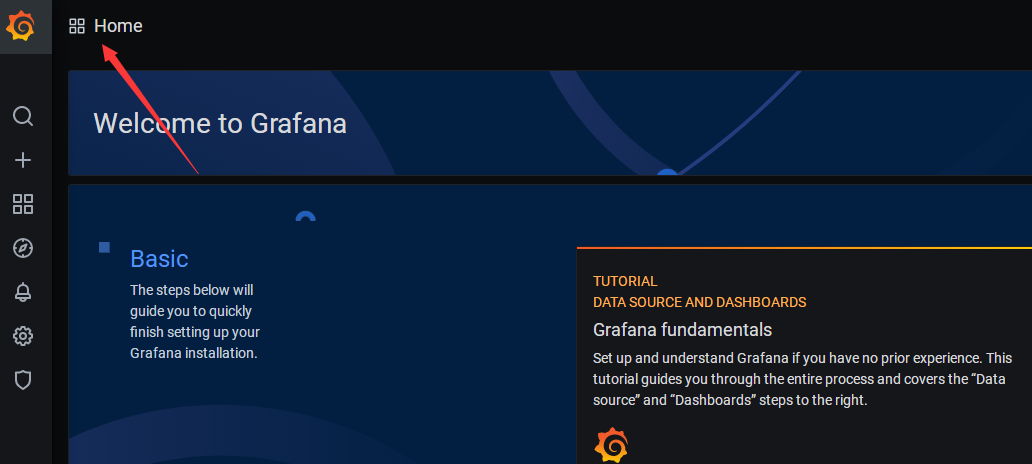

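On the first login, use username admin and password admin. For security, Grafana then prompts you to set a new password; once it is set, you reach the main interface.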

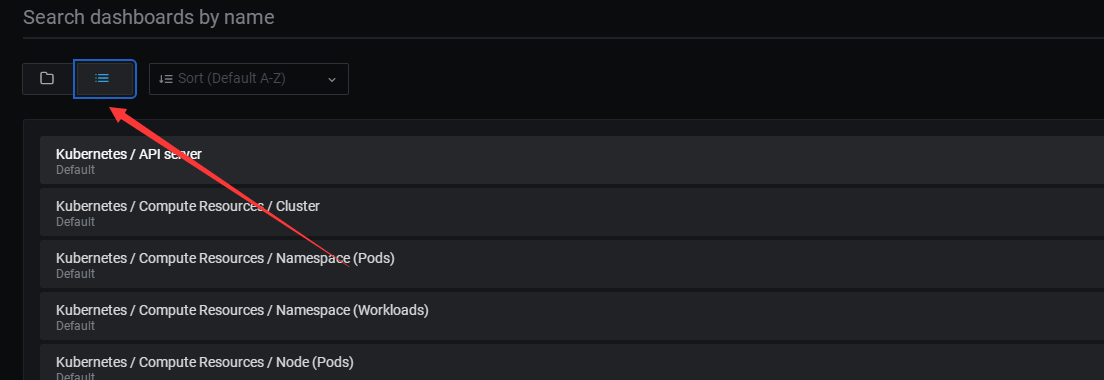

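Any of the dashboards in the lower part of the screenshot above can be opened to view the monitoring data. I had not set the timezone correctly, so the time was out of sync and there was no data. That is why there is no screenshot here.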
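A timezone mismatch alone does not change Unix timestamps, but a clock that is actually off does. Comparing epoch seconds across nodes therefore separates the two cases. In this minimal sketch, `clock_skew` (a hypothetical name) takes two epoch timestamps and prints their absolute difference. It fails when the difference exceeds `MAX_SKEW`; the 5-second default is an arbitrary assumption.

```shell
# Hypothetical helper: compare two Unix timestamps (seconds since the epoch).
# Prints the absolute difference; returns non-zero if it exceeds MAX_SKEW
# (default 5 seconds, an arbitrary threshold chosen for illustration).
clock_skew() {
  a="$1"
  b="$2"
  diff=$((a - b))
  if [ "$diff" -lt 0 ]; then
    diff=$((-diff))
  fi
  echo "$diff"
  [ "$diff" -le "${MAX_SKEW:-5}" ]
}
```

For example, assuming SSH access between nodes, `clock_skew "$(date +%s)" "$(ssh 192.168.1.14 date +%s)"` compares the local clock with the node at 192.168.1.14. The SSH round trip itself adds a little delay.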