Web cluster on a flannel network

Lab environment

| Host | Services | Notes |
|---|---|---|
| 192.168.1.12 | docker (already installed), etcd, flannel | server1 |
| 192.168.1.13 | docker (already installed), etcd, flannel | server2 |

Objective

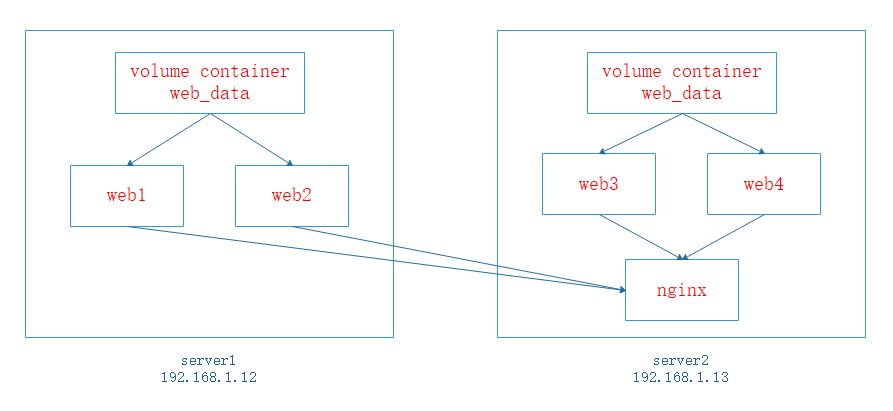

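The two Docker hosts are joined into a Docker cluster through flannel and etcd. The web cluster is deployed from the httpd image, and its content comes from a data-packed volume container whose page content is ChaiYanjiang. An nginx container acts as the proxy for the web cluster, so the page served by the web containers is fetched by requesting nginx. The nginx container itself is reachable only from inside the container network.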

Steps

Deploy flannel

server1/server2

Open the required ports, or turn off the firewall

firewall-cmd --add-port=2379/tcp --permanent

firewall-cmd --add-port=2379/udp --permanent

firewall-cmd --add-port=2380/tcp --permanent

firewall-cmd --add-port=2380/udp --permanent

firewall-cmd --add-port=4001/tcp --permanent

firewall-cmd --add-port=4001/udp --permanent

firewall-cmd --reload

Set the default firewall policy for forwarded traffic

iptables -P FORWARD ACCEPT

Disable SELinux

setenforce 0

vim /etc/selinux/config

# Change the value of SELINUX

SELINUX=disabled

Enable IP forwarding

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

sysctl -p

Install flannel and etcd with yum

yum -y install flannel etcd

Configure the etcd cluster

server1

[root@server1 ~]# cp -p /etc/etcd/etcd.conf /etc/etcd/etcd.conf.bak

[root@server1 ~]# vim /etc/etcd/etcd.conf

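# etcd data directory; named after ETCD_NAME for convenience

ETCD_DATA_DIR="/var/lib/etcd/cyj1"

# URL this member listens on for traffic from the other members

ETCD_LISTEN_PEER_URLS="http://192.168.1.12:2380"

# URLs clients connect to in order to talk to etcd

ETCD_LISTEN_CLIENT_URLS="http://192.168.1.12:2379,http://127.0.0.1:2379"

# Member name; must be different on every host

ETCD_NAME="cyj1"

# Peer URL of this member, advertised to the other members of the cluster

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.1.12:2380"

# Client URL of this member, advertised to the other members of the cluster

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.1.12:2379"

# All members of the cluster

ETCD_INITIAL_CLUSTER="cyj1=http://192.168.1.12:2380,cyj2=http://192.168.1.13:2380"

# Cluster token; pick your own, and use the same value on every member of the cluster

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cyj"

# "new" when bootstrapping a new cluster, "existing" when joining a cluster that already exists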

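ETCD_INITIAL_CLUSTER_STATE="new"

The configuration on server2 differs from server1 only in the IP address and the member name, so copy the files over with scp and edit them there

[root@server1 ~]# scp /etc/etcd/* root@192.168.1.13:/etc/etcd

server2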
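The manual edit on server2 can also be done with sed. The following is a minimal sketch, not part of the original procedure: `etcd_conf_for_server2` is a hypothetical helper name, and it assumes the copied file still holds server1's values (cyj1, 192.168.1.12). It prints the result instead of editing in place so that it can be reviewed first.

```shell
# Hypothetical helper: print a server2 version of server1's etcd.conf.
# Only the per-member keys are rewritten. ETCD_INITIAL_CLUSTER, the token and
# the cluster state are shared by both members and are left untouched.
etcd_conf_for_server2() {
    sed -E \
        -e '/^ETCD_(DATA_DIR|NAME)=/ s/cyj1/cyj2/' \
        -e '/^ETCD_(LISTEN_PEER_URLS|LISTEN_CLIENT_URLS|INITIAL_ADVERTISE_PEER_URLS|ADVERTISE_CLIENT_URLS)=/ s/192\.168\.1\.12/192.168.1.13/g' \
        "$1"
}

# Example (on server2, after the scp; check the output before replacing the file):
#   etcd_conf_for_server2 /etc/etcd/etcd.conf > /tmp/etcd.conf.server2
```

The values it should produce are the ones listed in the manual edit that follows.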

[root@server2 ~]# vim /etc/etcd/etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/cyj2"

ETCD_LISTEN_PEER_URLS="http://192.168.1.13:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.1.13:2379,http://127.0.0.1:2379"

ETCD_NAME="cyj2"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.1.13:2380"

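ETCD_ADVERTISE_CLIENT_URLS="http://192.168.1.13:2379"

Modify the etcd unit file

The packaged unit file does not pass the settings changed above to etcd on its command line, so add the corresponding options to the unit file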

server1

[root@server1 ~]# vim /usr/lib/systemd/system/etcd.service

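# On line 13 (the line starting with ExecStart), add the following just before the closing quote at the end of the line

# Keep everything on one line, separated by spaces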

--listen-peer-urls=\"${ETCD_LISTEN_PEER_URLS}\"

--advertise-client-urls=\"${ETCD_ADVERTISE_CLIENT_URLS}\"

--initial-cluster-token=\"${ETCD_INITIAL_CLUSTER_TOKEN}\"

--initial-cluster=\"${ETCD_INITIAL_CLUSTER}\"

--initial-cluster-state=\"${ETCD_INITIAL_CLUSTER_STATE}\"

server2 uses the same unit file as server1, so just copy it over

[root@server1 ~]# scp /usr/lib/systemd/system/etcd.service \

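root@192.168.1.13:/usr/lib/systemd/system/etcd.service

Start the etcd service

When etcd is started, the first node blocks until the other node is up and then finishes starting. If it reports an error or will not start, re-check the two files edited above (etcd.conf and etcd.service)

Start etcd on both servers at the same time

systemctl daemon-reload

systemctl start etcd

Check the cluster status; the last line should read cluster is healthy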

[root@server1 ~]# etcdctl cluster-health

member 1689fe155c673638 is healthy: got healthy result from http://192.168.1.12:2379

member ecf9066b8d70bc45 is healthy: got healthy result from http://192.168.1.13:2379

cluster is healthy

Container connectivity with the host-gw backend

server1

The address pool is 123.123.0.0/16, and each host is assigned a /24 subnet from it for its containers
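To make the sizing concrete, the arithmetic behind a /16 pool split into /24 subnets can be worked out in the shell. This is only an illustration of the prefix math; the variable names are made up for the example.

```shell
# Prefix lengths taken from the flannel config: a /16 pool carved into /24 subnets.
pool_prefix=16
subnet_prefix=24

# Number of /24 subnets that fit in the /16 pool: 2^(24-16)
subnets=$(( 1 << (subnet_prefix - pool_prefix) ))

# Usable addresses per subnet: 2^(32-24) minus the network and broadcast addresses
hosts_per_subnet=$(( (1 << (32 - subnet_prefix)) - 2 ))

echo "subnets: $subnets, usable addresses per subnet: $hosts_per_subnet"
```

One of the usable addresses in each subnet goes to docker0 as the gateway (the .1 address seen later), so slightly fewer are left for containers.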

[root@server1 ~]# vim /root/etcd.json

{

"NetWork":"123.123.0.0/16",

"SubnetLen":24,

"Backend":{

"Type":"host-gw"

}

}

Import the file into the etcd cluster

/usr/local/bin/network/config: this path does not exist on the host filesystem; it is a key inside etcd that flanneld reads

[root@server1 ~]# etcdctl --endpoints=http://192.168.1.12:2379 \

set /usr/local/bin/network/config < /root/etcd.json

{

"NetWork":"123.123.0.0/16",

"SubnetLen":24,

"Backend":{

"Type":"host-gw"

}

}

Update the flannel configuration file

server1

Tell flanneld which etcd endpoint to use and which key prefix holds the configuration imported above

[root@server1 ~]# vim /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://192.168.1.12:2379"

FLANNEL_ETCD_PREFIX="/usr/local/bin/network"

server2

Same as server1, except for the IP address

[root@server2 ~]# vim /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://192.168.1.13:2379"

FLANNEL_ETCD_PREFIX="/usr/local/bin/network"

Start the flanneld service

server1/server2

systemctl enable flanneld

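systemctl start flanneld

At this point flanneld adds routes to the host routing table for every subnet it has allocated in the cluster

server1

On server1, its own subnet 123.123.97.0/24 is forwarded through docker0, while server2's subnet 123.123.76.0/24 is routed to server2 (192.168.1.13) over the physical interface ens33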

[root@localhost ~]# ip r

default via 192.168.1.3 dev ens33 proto static metric 100

123.123.76.0/24 via 192.168.1.13 dev ens33

123.123.97.0/24 dev docker0 proto kernel scope link src 123.123.97.1

192.168.1.0/24 dev ens33 proto kernel scope link src 192.168.1.12 metric 100

192.168.122.0/24 dev virbr0 proto kernel scope link src 192.168.122.1

server2

The same applies on server2, with the two subnets swapped

[root@localhost ~]# ip r

default via 192.168.1.3 dev ens33 proto static metric 100

123.123.76.0/24 dev docker0 proto kernel scope link src 123.123.76.1

123.123.97.0/24 via 192.168.1.12 dev ens33

192.168.1.0/24 dev ens33 proto kernel scope link src 192.168.1.13 metric 100

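192.168.122.0/24 dev virbr0 proto kernel scope link src 192.168.122.1

The docker0 bridge

Because the host-gw backend is used, flannel does not create an extra virtual interface the way vxlan does. Packets are forwarded directly between docker0 and the host routing table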
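A quick way to see this on a host is to list only the routes that point at another host's container subnet. The sketch below is an illustration: `flannel_peer_routes` is a hypothetical helper, and it assumes the pool prefix is passed as its leading octets (123.123. in this lab).

```shell
# Hypothetical helper: list routes to other hosts' container subnets.
# With host-gw, each remote /24 inside the flannel pool appears as
# "<subnet> via <peer host IP> dev <physical NIC>".
flannel_peer_routes() {
    # $1: leading octets of the flannel pool, e.g. "123.123."
    ip route | awk -v p="$1" 'index($1, p) == 1 && $2 == "via" { print $1, "->", $3 }'
}

# Example: flannel_peer_routes 123.123.
```

On server1 in this lab it should report the 123.123.76.0/24 subnet with 192.168.1.13 as the next hop; the local subnet is skipped because it is attached to docker0 rather than routed via a peer.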

Check the subnet information on both hosts

server1

The gateway is 123.123.97.1 and the MTU is 1500

[root@server1 ~]# cat /run/flannel/subnet.env

FLANNEL_NETWORK=123.123.0.0/16

FLANNEL_SUBNET=123.123.97.1/24

FLANNEL_MTU=1500

FLANNEL_IPMASQ=false

server2

The gateway is 123.123.76.1 and the MTU is 1500

[root@server2 ~]# cat /run/flannel/subnet.env

FLANNEL_NETWORK=123.123.0.0/16

FLANNEL_SUBNET=123.123.76.1/24

FLANNEL_MTU=1500

FLANNEL_IPMASQ=false

server1

Add the MTU and the bip (the gateway address assigned above) to the docker unit file
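Rather than copying the two values by hand, they can be read from the subnet file that flanneld wrote. This is a minimal sketch under that assumption; `docker_opts_from_flannel` is a hypothetical helper, and it only prints the options, it does not edit the unit file.

```shell
# Hypothetical helper: derive the dockerd options from flannel's subnet file.
docker_opts_from_flannel() {
    # Source the file in a subshell so its variables do not leak into the caller.
    (
        . "${1:-/run/flannel/subnet.env}" || exit 1
        echo "--bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}"
    )
}

# Example: docker_opts_from_flannel        # reads /run/flannel/subnet.env
```

The printed string is what gets appended to the ExecStart line in the manual edit that follows.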

[root@server1 ~]# vim /usr/lib/systemd/system/docker.service

# Append to the end of line 14

--bip=123.123.97.1/24 --mtu=1500

server2

[root@server2 ~]# vim /usr/lib/systemd/system/docker.service

# Append to the end of line 14

--bip=123.123.76.1/24 --mtu=1500

server1/server2

Restart the docker service

systemctl daemon-reload

systemctl restart docker

Now check the IP address of docker0 on each host

server1

docker0 is now the gateway of the 123.123.97.0/24 subnet

[root@server1 ~]# ip a

...

5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:17:be:e7:95 brd ff:ff:ff:ff:ff:ff

inet 123.123.97.1/24 brd 123.123.97.255 scope global docker0

valid_lft forever preferred_lft forever

server2

docker0 is now the gateway of the 123.123.76.0/24 subnet

[root@server2 ~]# ip a

...

5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:9c:06:7a:d1 brd ff:ff:ff:ff:ff:ff

inet 123.123.76.1/24 brd 123.123.76.255 scope global docker0

valid_lft forever preferred_lft forever

Create a volume container to store the page

server1/server2

Create the directory and file that the volume container will map

mkdir /html

vim /html/index.html

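# Add the page content

ChaiYanjiang

Create the volume container and mount the directory into it. It does not matter that the container has the same name on both hosts

docker create --name web_data --volume /html/:/usr/local/apache2/htdocs busybox

Start the web cluster

server1

Start web1, using the volume container web_data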

[root@server1 ~]# docker run -itd -p 80 --name web1 --volumes-from web_data httpd

a6fcf2bcebfa45db30378ebd117c9dc661baddd6dc3b4aa25758ae9878f1200e

Check the IP of the web1 container; it is 123.123.97.2

# Use a busybox container to look up the IP of the httpd container

[root@server1 ~]# docker run -itd --name bbox1 --network container:web1 busybox

35baff1d41f15208ca2129d8764913c92bf086da4d3650c4eccefdebcd00331c

[root@server1 ~]# docker exec -it bbox1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:7b:7b:61:02 brd ff:ff:ff:ff:ff:ff

inet 123.123.97.2/24 brd 123.123.97.255 scope global eth0

valid_lft forever preferred_lft forever

Start web2, using the volume container web_data

[root@server1 ~]# docker run -itd -p 80 --name web2 --volumes-from web_data httpd

ef07ed8876cb19165df94933b978366c5a8b811975597540fb3f623b331df2c6

Check the IP of the web2 container; it is 123.123.97.3

[root@server1 ~]# docker run -itd --name bbox2 --network container:web2 busybox

bd31eef5d10cb17d4631bb86e92ecbf08fdd21b852b012cee1134a0fd9e57fef

[root@server1 ~]# docker exec -it bbox2 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:7b:7b:61:03 brd ff:ff:ff:ff:ff:ff

inet 123.123.97.3/24 brd 123.123.97.255 scope global eth0

valid_lft forever preferred_lft forever

Check the mapped ports

[root@server1 ~]# docker port web1

80/tcp -> 0.0.0.0:32768

[root@server1 ~]# docker port web2

80/tcp -> 0.0.0.0:32769

Request the mapped ports to verify the page

[root@server1 ~]# curl 192.168.1.12:32768

ChaiYanjiang

[root@server1 ~]# curl 192.168.1.12:32769

ChaiYanjiang

server2

Start web3, using the volume container web_data

[root@server2 ~]# docker run -itd -p 80 --name web3 --volumes-from web_data httpd

a4f10d930da28411e00453eddfcd5ad381416ed43f2d475edb920e2693e5d755

Check the IP of the web3 container; it is 123.123.76.2

# Use a busybox container to look up the IP of the httpd container

[root@server2 ~]# docker run -itd --name bbox3 --network container:web3 busybox

ef12ca0c403cc702ccfc734d7c07816753f6f9f511bf562d74a46e7339ecde66

[root@server2 ~]# docker exec -it bbox3 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:7b:7b:4c:02 brd ff:ff:ff:ff:ff:ff

inet 123.123.76.2/24 brd 123.123.76.255 scope global eth0

valid_lft forever preferred_lft forever

Start web4, using the volume container web_data

[root@server2 ~]# docker run -itd -p 80 --name web4 --volumes-from web_data httpd

f5e01b969f28d699f642b3960d7b50ba96caef5393c3d9bacb97f601b0459c0f

Check the IP of the web4 container; it is 123.123.76.3

[root@server2 ~]# docker run -itd --name bbox4 --network container:web4 busybox

09b84210c6592ad901645ca03690530226d265529426653c4dc51085b6e276b5

[root@server2 ~]# docker exec -it bbox4 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

10: eth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:7b:7b:4c:03 brd ff:ff:ff:ff:ff:ff

inet 123.123.76.3/24 brd 123.123.76.255 scope global eth0

valid_lft forever preferred_lft forever

Check the mapped ports

[root@server2 ~]# docker port web3

80/tcp -> 0.0.0.0:32769

[root@server2 ~]# docker port web4

80/tcp -> 0.0.0.0:32770

Request the mapped ports to verify the page

[root@server2 ~]# curl 192.168.1.13:32769

ChaiYanjiang

[root@server2 ~]# curl 192.168.1.13:32770

ChaiYanjiang

nginx container as a reverse proxy

Start the nginx container

Expose the nginx configuration directory on the host through a Docker-managed volume

[root@server2 ~]# docker run -itd --name nginx --volume nginx_conf:/etc/nginx nginx

7a5cc69c5535bf834ec6094b6592481e17562718a66e15420243e350a1dd2e1f

Edit the configuration files on the host

[root@server2 ~]# vim /var/lib/docker/volumes/nginx_conf/_data/nginx.conf

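# Add the following load-balancing (upstream) block at the third line from the end; it must sit inside the http block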

upstream httpserver {

server 192.168.1.12:32768 weight=1;

server 192.168.1.12:32769 weight=1;

server 192.168.1.13:32769 weight=1;

server 192.168.1.13:32770 weight=1;

}
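The server entries above are the host addresses combined with the ports reported by docker port, and those ports can change when the containers are recreated. As an illustration only, a small function can print the block from a list of backends; `make_upstream` is a hypothetical helper, not an nginx or Docker command.

```shell
# Hypothetical helper: print an nginx upstream block for the given host:port backends.
make_upstream() {
    name=$1; shift
    echo "upstream $name {"
    for backend in "$@"; do
        echo "    server $backend weight=1;"
    done
    echo "}"
}

# Example:
#   make_upstream httpserver 192.168.1.12:32768 192.168.1.12:32769 \
#                            192.168.1.13:32769 192.168.1.13:32770
```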

[root@server2 ~]# vim /var/lib/docker/volumes/nginx_conf/_data/conf.d/default.conf

# Change the location block so that it proxies to the upstream defined in nginx.conf

location / {

proxy_pass http://httpserver;

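}

Reload nginx

[root@localhost ~]# docker exec -it nginx nginx -s reload

Check the IP of the nginx container

The nginx image does not include some commands (ip among them), so look up the IP from a busybox container that joins the nginx container's network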
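As an alternative that needs no helper container, the address can be read on the host with docker inspect. The wrapper below is a sketch; `container_ip` is a hypothetical name, and it assumes the container is attached to a single network (the default bridge in this lab).

```shell
# Hypothetical helper: print a container's IP address via docker inspect.
container_ip() {
    docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
}

# Example: container_ip nginx
```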

[root@localhost ~]# docker run -it --name bbox5 --network container:nginx busybox /bin/sh

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

12: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:7b:7b:4c:04 brd ff:ff:ff:ff:ff:ff

inet 123.123.76.4/24 brd 123.123.76.255 scope global eth0

valid_lft forever preferred_lft forever

Verify access through nginx

[root@localhost ~]# curl 123.123.76.4

ChaiYanjiang
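A single request reaches only one backend. To exercise the proxy a little more, the request can be repeated several times. The function below is a minimal sketch: `check_page` is a hypothetical helper, and the timeout and default number of tries are arbitrary choices for this example.

```shell
# Hypothetical helper: request a URL several times and count matching responses.
check_page() {
    url=$1; expected=$2; tries=${3:-4}
    ok=0
    i=0
    while [ "$i" -lt "$tries" ]; do
        # -s: silent; --max-time: do not hang on an unreachable backend
        body=$(curl -s --max-time 3 "$url")
        [ "$body" = "$expected" ] && ok=$((ok + 1))
        i=$((i + 1))
    done
    echo "$ok/$tries responses matched"
    # Succeed only if every response matched
    [ "$ok" -eq "$tries" ]
}

# Example (from a host or container that can reach the flannel network):
#   check_page http://123.123.76.4 ChaiYanjiang 8
```

Because all four backends serve the same page, this confirms that nginx answers consistently, not which backend handled each request.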