Cross-Host Docker Container Communication Across Different Networks

Lab environment

| IP | Services | Notes (alias) |
|---|---|---|
| 192.168.1.12 | Docker/consul | server1 |
| 192.168.1.13 | Docker | server2 |

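Lab objectives

server1: run two containers, bbox1 and bbox2

server2: run two containers, bbox3 and bbox4

net1 (overlay network): used by bbox1 and bbox4 to communicate

net2 (overlay network): used by bbox2 and bbox3 to communicate, with the subnet manually set to 10.10.10.0/24; bbox2 uses 10.10.10.100/24 and bbox3 uses 10.10.10.10/24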

Procedure

Prepare the overlay environment

Change the hostnames

192.168.1.12

[root@localhost ~]# hostname server1

[root@localhost ~]# bash

[root@server1 ~]#

192.168.1.13

[root@localhost ~]# hostname server2

[root@localhost ~]# bash

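[root@server2 ~]#

server1

Pull the consul image

[root@server1 ~]# docker pull progrium/consul

Several important ports need to be opened on both hosts:

Docker management port: 2376/tcp 2376/udp

Docker cluster communication: 2733/tcp 2733/udp

Docker inter-host communication: 7946/tcp 7946/udp

Docker overlay network: 4789/tcp 4789/udp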
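The port list above maps naturally onto a loop. The following is a minimal sketch, assuming firewalld is in use as in this walkthrough. `print_port_rules` is a hypothetical helper name, and it only prints the commands (a dry run) so they can be reviewed before being run as root.

```shell
#!/bin/sh
# Hypothetical helper (not part of Docker or firewalld): print the
# firewall-cmd invocations that open each given port for TCP and UDP.
# It only echoes the commands, so nothing changes until they are run.
print_port_rules() {
    for port in "$@"; do
        for proto in tcp udp; do
            echo "firewall-cmd --add-port=${port}/${proto} --permanent"
        done
    done
    # Permanent rules only take effect after a reload.
    echo "firewall-cmd --reload"
}

# Port numbers are copied from the list above.
print_port_rules 2376 2733 7946 4789
```

Piping the output to `sh` as root on each host would apply the rules.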

firewall-cmd --add-port=2733/tcp --permanent

firewall-cmd --add-port=2733/udp --permanent

firewall-cmd --add-port=2376/udp --permanent

firewall-cmd --add-port=2376/tcp --permanent

firewall-cmd --add-port=7946/tcp --permanent

firewall-cmd --add-port=7946/udp --permanent

firewall-cmd --add-port=4789/udp --permanent

firewall-cmd --add-port=4789/tcp --permanent

firewall-cmd --reload

firewall-cmd --list-port

2733/tcp 2733/udp 2376/udp 2376/tcp 7946/tcp 7946/udp 4789/udp 4789/tcp

server1

Run consul

[root@server1 ~]# docker run -d --restart always -p 8400:8400 -p 8500:8500 \

-p 8600:53/udp progrium/consul -server -bootstrap -ui-dir /ui

4fe0b24e05e3476d528e2881331128b7c69d185712bf4b69f3bb3e42a3f2f944

Add consul to Docker's systemd unit file

[root@server1 ~]# vim /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd -H fd:// -H tcp://0.0.0.0:2376 --containerd=/run/containerd/containerd.sock \

--cluster-store=consul://192.168.1.12:8500 --cluster-advertise=ens33:2376

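--cluster-store specifies the address of consul.

--cluster-advertise tells consul the address at which this host can be reached.

Copy the modified file to server2

[root@server1 ~]# scp /usr/lib/systemd/system/docker.service root@192.168.1.13:/usr/lib/systemd/system/

After the file has been modified, reload systemd and restart the service. Because consul runs on server1, the Docker service on server1 must be started first, and only then the Docker service on server2.

server1

Wait until the first host has finished starting before restarting the second: consul has to bring up port 8500, so startup is a little slow.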

[root@server1 ~]# systemctl daemon-reload

[root@server1 ~]# systemctl restart docker

server2

[root@server2 ~]# systemctl daemon-reload

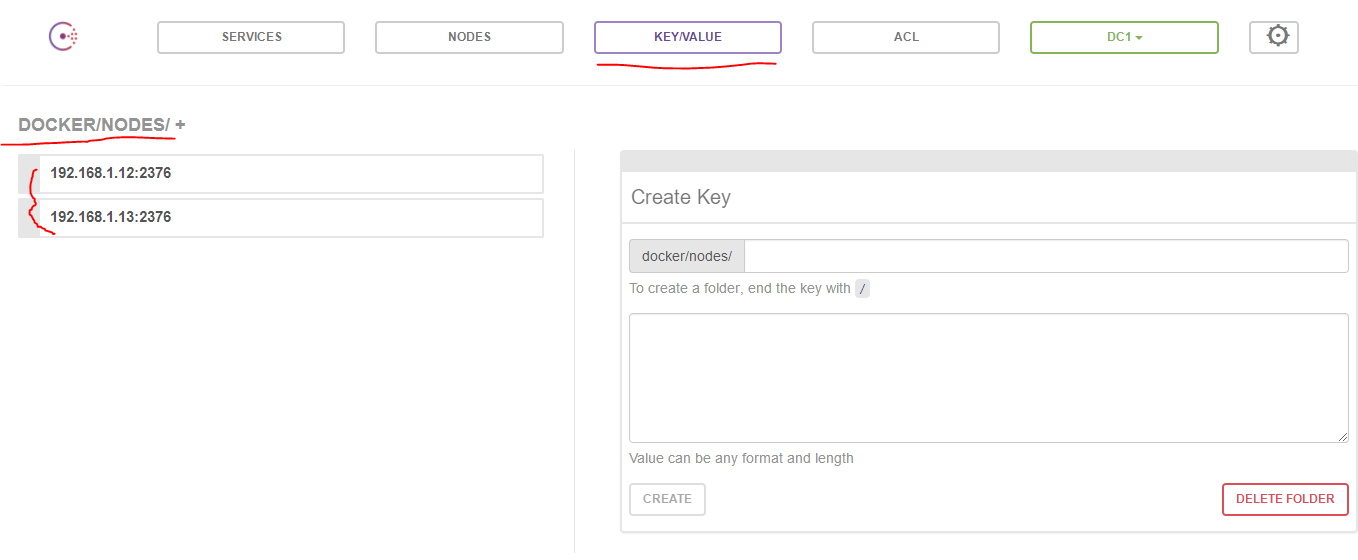

[root@server2 ~]# systemctl restart docker打开浏览器,访问http://192.168.1.12:8500

找到以下两个节点,也就是docker1和docker2的ip:2376docker管理节点

环境准备完成
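Registration can also be checked from the command line instead of the web UI. The sketch below builds a URL for consul's KV HTTP API (`/v1/kv/<prefix>?keys`). `consul_keys_url` is a hypothetical helper, and the prefix `docker/nodes` is an assumption about where the Docker daemons store their entries; adjust it to whatever the UI shows.

```shell
#!/bin/sh
# Hypothetical helper: build the consul KV URL that lists keys under a prefix.
consul_keys_url() {
    consul_addr="$1"   # host:port of consul, e.g. 192.168.1.12:8500
    prefix="$2"        # KV prefix to list (docker/nodes is an assumption)
    echo "http://${consul_addr}/v1/kv/${prefix}?keys"
}

# Usage, once both Docker daemons have been restarted:
#   curl -s "$(consul_keys_url 192.168.1.12:8500 docker/nodes)"
```

If both hosts have registered, the response should contain one key per host.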

Create the overlay networks

Per the lab requirements, create two overlay networks, net1 and net2; net2 is given the subnet 10.10.10.0/24.

# Enable promiscuous mode on the NIC

[root@server1 ~]# ifconfig ens33 promisc

# Create the net1 overlay network

[root@server1 ~]# docker network create --driver overlay --attachable net1

fffcacc4f9fd648235775abdb936f86139badbf6545f3e88b7e3f9f2222d5c35

# Create the net2 overlay network

[root@server1 ~]# docker network create --driver overlay --attachable --subnet 10.10.10.0/24 --gateway 10.10.10.1 net2

fd4f5a8769f2ffce0b08ea34934dbec371fa253ed7161cf591d4c9f6463f8373

List the two networks that were just created

[root@server1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

f7486dea4d5d bridge bridge local

dc8bfdbda464 host host local

fffcacc4f9fd net1 overlay global

fd4f5a8769f2 net2 overlay global

ecbab8a758e6 none null local

Check the subnet of net2

[root@server1 ~]# docker network inspect net2

"Subnet": "10.10.10.0/24",

"Gateway": "10.10.10.1"

Run containers on server1

Run container bbox1

Run bbox1 on net1

[root@server1 ~]# docker run -itd --name bbox1 --network net1 busybox

cef7e37d81c927d6d7f47e7c8efb03cfa158642b87f9c8da85f06410eb9fc976

[root@server1 ~]# docker exec bbox1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

12: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue

link/ether 02:42:0a:00:00:02 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.2/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

15: eth1@if16: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.2/16 brd 172.18.255.255 scope global eth1

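valid_lft forever preferred_lft forever

| Interface | IP |
|---|---|
| 12:eth0@if13 | 10.0.0.2/24 |
| 15:eth1@if16 | 172.18.0.2/16 |

The first time an overlay network is used on a host, Docker creates a docker_gwbridge bridge, which gives containers on overlay networks access to external networks.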

[root@server1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

f7486dea4d5d bridge bridge local

66ac54f1fc4c docker_gwbridge bridge local

dc8bfdbda464 host host local

fffcacc4f9fd net1 overlay global

fd4f5a8769f2 net2 overlay global

ecbab8a758e6 none null local

Run container bbox2

Run bbox2 on net2 with the IP 10.10.10.100/24

[root@server1 ~]# docker run -itd --name bbox2 --network net2 --ip 10.10.10.100 busybox

d7f85cb5fd17d68534b92844f8e4b25caa916bf48fe8295980df1e0ed10d13ce

Check the IP of bbox2

[root@server1 ~]# docker exec -it bbox2 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

18: eth0@if19: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue

link/ether 02:42:0a:0a:0a:64 brd ff:ff:ff:ff:ff:ff

inet 10.10.10.100/24 brd 10.10.10.255 scope global eth0

valid_lft forever preferred_lft forever

20: eth1@if21: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1

valid_lft forever preferred_lft forever

| Interface | IP |
|---|---|
| 18:eth0@if19 | 10.10.10.100/24 |
| 20:eth1@if21 | 172.18.0.3/16 |
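The per-network addresses can also be read from `docker inspect` rather than from `ip a` inside the container. This is a minimal sketch: `container_ips` is a hypothetical wrapper name, and the Go template walks `.NetworkSettings.Networks` and prints one "network address" pair per line.

```shell
#!/bin/sh
# Hypothetical wrapper: list each network a container is attached to,
# together with the IP address it holds on that network.
container_ips() {
    docker inspect -f '{{range $name, $net := .NetworkSettings.Networks}}{{$name}} {{$net.IPAddress}}{{"\n"}}{{end}}' "$1"
}

# Usage on server1:
#   container_ips bbox1
#   container_ips bbox2
```

This form is easier to use in scripts because it does not depend on interface numbering inside the container.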

Run containers on server2

Run container bbox3

Run bbox3 on net2 with the IP 10.10.10.10/24

[root@server2 ~]# docker run -itd --name bbox3 --network net2 --ip 10.10.10.10 busybox

0e7b3c89a1fb289e7abc88060e16a76feb54381525b57c16de770ec40340b0f0

Check the IP of bbox3

[root@server2 ~]# docker exec -it bbox3 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue

link/ether 02:42:0a:0a:0a:0a brd ff:ff:ff:ff:ff:ff

inet 10.10.10.10/24 brd 10.10.10.255 scope global eth0

valid_lft forever preferred_lft forever

11: eth1@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.2/16 brd 172.18.255.255 scope global eth1

valid_lft forever preferred_lft forever

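failed to resize tty, using default size

| Interface | IP |
|---|---|
| 8:eth0@if9 | 10.10.10.10/24 |
| 11:eth1@if12 | 172.18.0.2/16 |

This is the first container run on server2, so a docker_gwbridge bridge is created here as well, giving containers on overlay networks access to external networks.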

[root@server2 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

8c0fc16aa7d4 bridge bridge local

48dd88f07ff2 docker_gwbridge bridge local

fb1387ab7fc3 host host local

fffcacc4f9fd net1 overlay global

fd4f5a8769f2 net2 overlay global

a728ac0f7801 none null local

Run container bbox4

Run bbox4 on the net1 overlay network

[root@server2 ~]# docker run -itd --name bbox4 --network net1 busybox

686c5cc2acd2a61db709bc55be862b647abd82e8e5cd142bfff5e02f90928b8b

Check the IP of bbox4

[root@server2 ~]# docker exec -it bbox4 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

14: eth0@if15: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue

link/ether 02:42:0a:00:00:03 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.3/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

16: eth1@if17: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1

valid_lft forever preferred_lft forever

| Interface | IP |
|---|---|
| 14:eth0@if15 | 10.0.0.3/24 |
| 16:eth1@if17 | 172.18.0.3/16 |

Verify connectivity within the same overlay network

server1

net1: bbox1 pings bbox4

[root@server1 ~]# docker exec bbox1 ping -c 2 bbox4

PING bbox4 (10.0.0.3): 56 data bytes

64 bytes from 10.0.0.3: seq=0 ttl=64 time=0.519 ms

64 bytes from 10.0.0.3: seq=1 ttl=64 time=2.098 ms

--- bbox4 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.519/1.308/2.098 ms

net2: bbox2 pings bbox3

[root@server1 ~]# docker exec bbox2 ping -c 2 bbox3

PING bbox3 (10.10.10.10): 56 data bytes

64 bytes from 10.10.10.10: seq=0 ttl=64 time=0.695 ms

64 bytes from 10.10.10.10: seq=1 ttl=64 time=2.318 ms

--- bbox3 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

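round-trip min/avg/max = 0.695/1.506/2.318 ms

Communication across different overlay networks

The lab also requires bbox1 to be able to communicate with bbox2 and bbox3 on net2.

[root@server1 ~]# docker network connect net2 bbox1

bbox1 now has an additional interface, which connects it to net2.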

[root@server1 ~]# docker exec bbox1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

12: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue

link/ether 02:42:0a:00:00:02 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.2/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

15: eth1@if16: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.2/16 brd 172.18.255.255 scope global eth1

valid_lft forever preferred_lft forever

22: eth2@if23: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue

link/ether 02:42:0a:0a:0a:02 brd ff:ff:ff:ff:ff:ff

inet 10.10.10.2/24 brd 10.10.10.255 scope global eth2

valid_lft forever preferred_lft forever

| Interface | IP |
|---|---|
| 12:eth0@if13 | 10.0.0.2/24 |
| 15:eth1@if16 | 172.18.0.2/16 |
| 22:eth2@if23 | 10.10.10.2/24 |

Verification

bbox1 pings bbox2/bbox3

[root@server1 ~]# docker exec -it bbox1 ping -c 2 bbox2

PING bbox2 (10.10.10.100): 56 data bytes

64 bytes from 10.10.10.100: seq=0 ttl=64 time=0.146 ms

64 bytes from 10.10.10.100: seq=1 ttl=64 time=0.081 ms

--- bbox2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.081/0.113/0.146 ms

[root@server1 ~]# docker exec -it bbox1 ping -c 2 bbox3

PING bbox3 (10.10.10.10): 56 data bytes

64 bytes from 10.10.10.10: seq=0 ttl=64 time=3.447 ms

64 bytes from 10.10.10.10: seq=1 ttl=64 time=1.899 ms

--- bbox3 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

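round-trip min/avg/max = 1.899/2.673/3.447 ms

bbox4 pings bbox2/bbox3

These pings are expected to fail: bbox4 is attached only to net1, so the names bbox2 and bbox3 do not resolve from it.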

[root@server2 ~]# docker exec -it bbox4 ping -c 2 bbox2

ping: bad address 'bbox2'

[root@server2 ~]# docker exec -it bbox4 ping -c 2 bbox3

ping: bad address 'bbox3'

Inspect the network namespaces of the overlay networks

server1

[root@server1 ~]# ln -s /var/run/docker/netns/ /var/run/netns

[root@server1 ~]# ip netns

6b6eda831126 (id: 4)

1-fd4f5a8769 (id: 3)

a84b830b145d (id: 2)

1-fffcacc4f9 (id: 1)

c311683c948b (id: 0)

server2

[root@server2 ~]# ln -s /var/run/docker/netns/ /var/run/netns

[root@server2 ~]# ip netns

78e0b21c4f14 (id: 3)

1-fffcacc4f9 (id: 2)

ea7d77b95e76 (id: 1)

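1-fd4f5a8769 (id: 0)

Note the namespaces that have the same name on both hosts:

1-fffcacc4f9 and 1-fd4f5a8769

Containers on the same overlay network can communicate because each overlay has its own network namespace that its containers connect to. bbox1 can reach the containers on net2 because it is attached to the namespaces of both net1 and net2.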
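In the `ip netns` listings above, the shared namespace names look like `1-` followed by the first 10 characters of the overlay network ID. The sketch below encodes that observation; `overlay_netns_name` is a hypothetical helper, and the naming pattern is inferred from this lab's output rather than taken from a documented interface.

```shell
#!/bin/sh
# Hypothetical helper: derive the overlay namespace name from a network ID,
# following the pattern seen in this lab ("1-" + first 10 characters).
overlay_netns_name() {
    printf '1-%s\n' "$(printf '%s' "$1" | cut -c1-10)"
}

# Network IDs as printed by `docker network create` earlier in this walkthrough.
overlay_netns_name fffcacc4f9fd648235775abdb936f86139badbf6545f3e88b7e3f9f2222d5c35  # net1
overlay_netns_name fd4f5a8769f2ffce0b08ea34934dbec371fa253ed7161cf591d4c9f6463f8373  # net2
```

With these two IDs the helper prints `1-fffcacc4f9` and `1-fd4f5a8769`, matching the namespaces listed on both hosts.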

Inspect the network namespaces to determine which one belongs to which overlay network

Namespaces on server1

[root@server1 ~]# ip netns exec 1-fd4f5a8769 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether 4e:b4:bb:d6:8a:f2 brd ff:ff:ff:ff:ff:ff

inet 10.10.10.1/24 brd 10.10.10.255 scope global br0

valid_lft forever preferred_lft forever

17: vxlan0@if17: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br0 state UNKNOWN group default

link/ether 4e:b4:bb:d6:8a:f2 brd ff:ff:ff:ff:ff:ff link-netnsid 0

19: veth0@if18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br0 state UP group default

link/ether e6:30:0e:11:12:1d brd ff:ff:ff:ff:ff:ff link-netnsid 1

23: veth1@if22: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br0 state UP group default

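link/ether c2:9a:b6:eb:42:cf brd ff:ff:ff:ff:ff:ff link-netnsid 2

Inspection shows that br0 in namespace 1-fd4f5a8769 has the IP 10.10.10.1/24. The subnet of net2 is the 10.10.10.0/24 that was specified manually, so this namespace belongs to net2.

| Interface in namespace 1-fd4f5a8769 | Purpose |
|---|---|
| 2: br0 (10.10.10.1/24) | Connects all containers on net2 (gateway of the namespace) |
| 17: vxlan0@if17 | Communication with the same namespace on other hosts |
| 19: veth0@if18 | Connects to bbox2 |
| 23: veth1@if22 | Connects to bbox1 |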

[root@server1 ~]# ip netns exec 1-fffcacc4f9 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether 26:ea:5e:ba:21:90 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.1/24 brd 10.0.0.255 scope global br0

valid_lft forever preferred_lft forever

11: vxlan0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br0 state UNKNOWN group default

link/ether 8e:7b:93:14:c0:5c brd ff:ff:ff:ff:ff:ff link-netnsid 0

13: veth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br0 state UP group default

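link/ether 26:ea:5e:ba:21:90 brd ff:ff:ff:ff:ff:ff link-netnsid 1

Inspection shows that br0 in namespace 1-fffcacc4f9 has the IP 10.0.0.1/24. The subnet of net1 is the automatically allocated 10.0.0.0/24, so this namespace belongs to net1.

| Interface in namespace 1-fffcacc4f9 | Purpose |
|---|---|
| 2: br0 (10.0.0.1/24) | Connects all containers on net1 (gateway of the namespace) |
| 11: vxlan0@if11 | Communication with the same namespace on other hosts |
| 13: veth0@if12 | Connects to bbox1 (its eth0 is 12:eth0@if13) |

Namespaces on server2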

[root@server2 ~]# ip netns exec 1-fd4f5a8769 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether 16:e4:e7:bf:ae:76 brd ff:ff:ff:ff:ff:ff

inet 10.10.10.1/24 brd 10.10.10.255 scope global br0

valid_lft forever preferred_lft forever

7: vxlan0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br0 state UNKNOWN group default

link/ether 96:7b:91:ab:bd:82 brd ff:ff:ff:ff:ff:ff link-netnsid 0

9: veth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br0 state UP group default

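link/ether 16:e4:e7:bf:ae:76 brd ff:ff:ff:ff:ff:ff link-netnsid 1

On server1 this namespace belongs to net2, and the same holds here.

| Interface in namespace 1-fd4f5a8769 | Purpose |
|---|---|
| 2: br0 (10.10.10.1/24) | Connects all containers on net2 (gateway of the namespace) |
| 7: vxlan0@if7 | Communication with the same namespace on other hosts |
| 9: veth0@if8 | Connects to bbox3 (its eth0 is 8:eth0@if9) |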

[root@server2 ~]# ip netns exec 1-fffcacc4f9 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default

link/ether 36:ae:b7:87:19:df brd ff:ff:ff:ff:ff:ff

inet 10.0.0.1/24 brd 10.0.0.255 scope global br0

valid_lft forever preferred_lft forever

13: vxlan0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br0 state UNKNOWN group default

link/ether 36:ae:b7:87:19:df brd ff:ff:ff:ff:ff:ff link-netnsid 0

15: veth0@if14: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br0 state UP group default

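link/ether ce:51:08:bc:15:50 brd ff:ff:ff:ff:ff:ff link-netnsid 1

On server1 this namespace belongs to net1, and the same holds here.

| Interface in namespace 1-fffcacc4f9 | Purpose |
|---|---|
| 2: br0 (10.0.0.1/24) | Connects all containers on net1 (gateway of the namespace) |
| 13: vxlan0@if13 | Communication with the same namespace on other hosts |
| 15: veth0@if14 | Connects to bbox4 on net1 |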

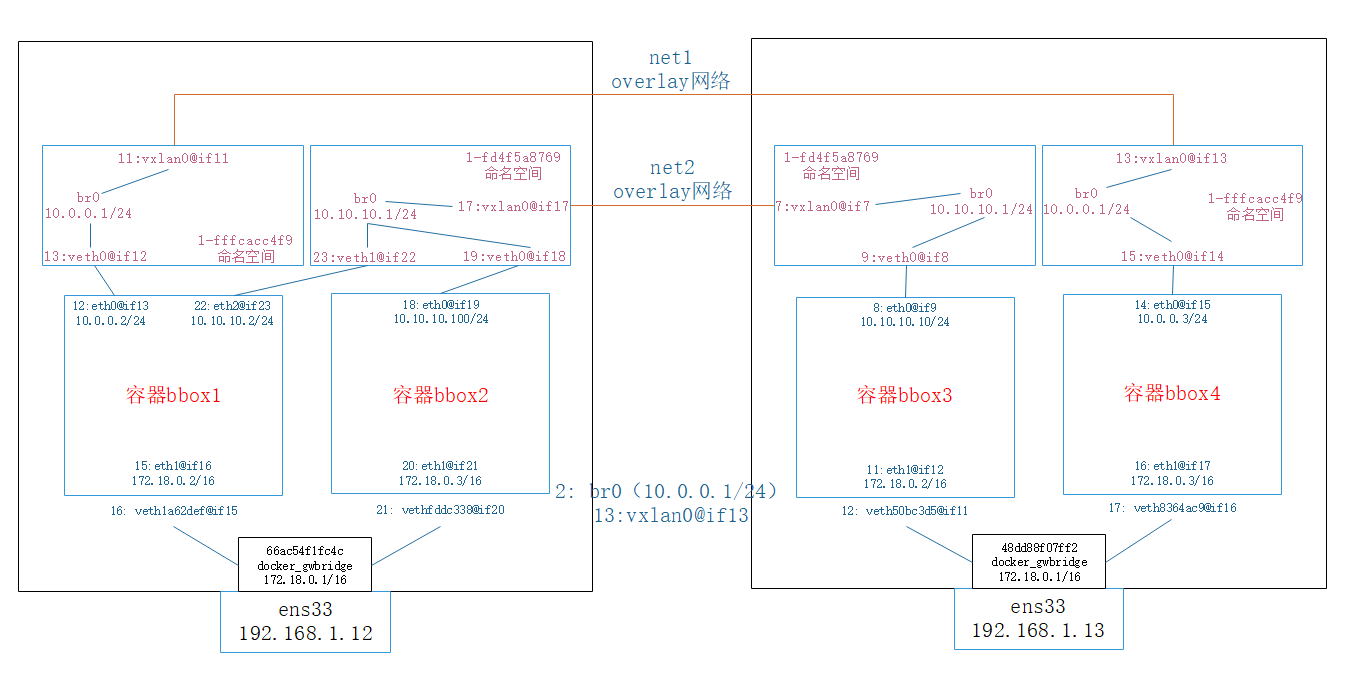

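Diagram: cross-host container access across different overlay networks

Each bbox container reaches external networks through its own interface that is bridged to the host's docker_gwbridge.

When bbox1 on 192.168.1.12 communicates with bbox4 on 192.168.1.13, traffic leaves bbox1 through its 12@13 interface, enters br0 in the net1 namespace, crosses vxlan0 to the vxlan0 of the same namespace on 192.168.1.13, and is handed by that namespace's br0 to the 14@15 interface bridged to bbox4, which completes the exchange.

The other paths work the same way.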
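The reachability checks from this walkthrough can be re-run in one pass with a small wrapper. This is a minimal sketch: `can_ping` is a hypothetical helper that wraps the same `docker exec ... ping -c 2 ...` command used earlier, and it has to be run on the host where the source container lives.

```shell
#!/bin/sh
# Hypothetical helper: report whether one container can ping another by name.
can_ping() {
    src="$1"
    dst="$2"
    if docker exec "$src" ping -c 2 "$dst" >/dev/null 2>&1; then
        echo "$src -> $dst: reachable"
    else
        echo "$src -> $dst: unreachable"
    fi
}

# Usage on server1:
#   can_ping bbox1 bbox4; can_ping bbox2 bbox3
#   can_ping bbox1 bbox2; can_ping bbox1 bbox3
# Usage on server2 (expected to be unreachable, since bbox4 is only on net1):
#   can_ping bbox4 bbox2; can_ping bbox4 bbox3
```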